At BlackBuck we match customers with truck suppliers in real time. For every shipment we centrally verify compliance documents such as the truck registration certificate, fitness certificate, insurance, driver licenses and tax account number. The documents are uploaded through our app from across the country. Many of these locations are rural, network connectivity is poor, and a wide variety of devices is in use. Most users are connected over 2G/EDGE or 3G (HSPA). After evaluating the available libraries for document upload from Android devices, we found gaps that made them unsuitable for continuous image upload. The purpose of this blog is to publish our findings from a couple of years of work on optimising our document uploads.

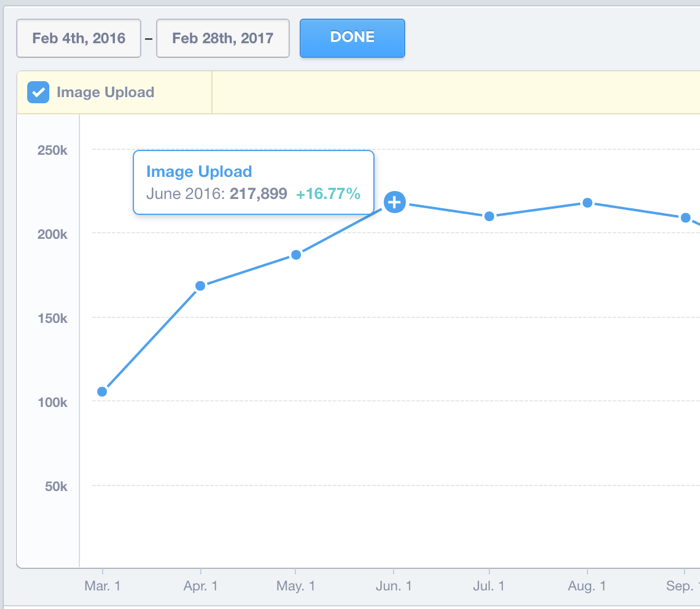

To give a sense of the volume, take the image above as an example.

On average, we handle over 200,000 image uploads every month. How do we ensure that every image or document uploaded from an Android device reaches our server reliably, without a bad experience for the app user? What are the intricacies of the Android OS when it comes to uploads? Are there bugs in the Amazon S3 Android APIs that we need to work around? Are there problems in dealing with the S3 server? These are some of the questions we will answer, and we will also show how we at BlackBuck built a high-performance upload architecture around these pain points.

Let’s start with Amazon S3. Many know S3 as the modern-day container for dumping raw data, and it has a lot to offer. Amazon also provides an S3 SDK for Android, through which an app can connect and save data onto S3. At BlackBuck, we found a few problems in using this SDK.

- Firstly, each connection takes a long time (about 30 secs on a 2G network, 10–15 secs on 3G). The same is true of disconnection. The SDK performs authentication and authorization on each connection, which adds considerable overhead to every upload.

- There are problems in packet creation, because of which we often hit an internal sizing issue: more packets are sent or received than expected.

- A “Network time skewed” error is observed most of the time. This happens when the S3 server decides that the date & time of the connecting client is too far from its own; it does this mostly to prevent malpractice. But more often than not, the S3 SDK reports this error even though the time on the client is correct. At BlackBuck we worked around it by asking users to uncheck and re-check “Automatic date & time” in the Date & time settings. After that, the S3 SDK behaved properly for a reasonable duration. (PS: The issue was observed even when the user already had this option checked, hence the uncheck + check.)

- Every time an upload pauses due to a connection issue, it has to begin all over again. Pause and retry is only available with multi-part uploads. (Here the connection overhead described in the first point is incurred again on every manual retry.)

- Multi-part upload is not available for documents smaller than 50MB (the SDK had this limit at the time we used it), which is almost always the case at BlackBuck. Hence we could not use this functionality.

- Given the poor connectivity on EDGE/GPRS networks, connections to S3 frequently timed out, and attempts to change the timeout through the APIs had no visible effect. For reference, below is the sample config we used.

```java
ClientConfiguration config = new ClientConfiguration();
config.setMaxErrorRetry(0);
config.setMaxConnections(2);
config.setSocketTimeout(60000);
config.setProtocol(Protocol.HTTP);

AmazonS3Client s3Client = new AmazonS3Client(credentials, config);
uploadTransferManger = new TransferUtility(s3Client, getApplicationContext());
```

- S3 upload throttles the network. See https://forums.aws.amazon.com/thread.jspa?threadID=149194.

Given these problems, we had to think of an alternative, but at the same time we couldn’t let go of S3, as our backend depended on it for document storage.

Now let’s look at some of the concerns with the Android OS & its HTTP stack.

- Parallel upload/download seems impractical: when sockets are opened for data transfer, uploading data throttles the network. This is separate from the S3 throttling (due to the S3 SDK). Although it is not as severe as S3 throttling, bandwidth allocation is not as flexible as one would think. We tried this with both Volley & Retrofit and found the behaviour to be similar.

- Battery optimization mode: Android has a mode where, below a certain battery percentage, optimization kicks in and limits network availability to the foreground app only. This means that if the app is killed or goes into the background while an upload is in progress, the upload stops working. At BlackBuck, we resolved this with a combination of AlarmManager, a foreground service and wake locks.

- Network restriction mode: this is another restrictive mode available to users, where an app that goes into the background or is killed is not allowed to access the network at all.

- There are also SSL exceptions that happen during the handshake. One key observation is that when the user’s date & time has been reset to a value before the issue date of the certificate of your website/Amazon S3, this issue can occur. Hence the date & time of the client (the mobile device in this case) is very important.
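To make the last point concrete, here is a minimal sketch of the condition involved: a certificate is only accepted when the device clock falls inside its validity window. The class and method names are hypothetical, the dates in the usage note are made up, and this is not code from our library; it only illustrates why a reset clock can break the handshake.

```java
import java.time.Instant;

/** Hypothetical helper; illustrates the validity-window check only. */
final class ClockCheck {
    private ClockCheck() {}

    /**
     * Returns true when the device clock lies inside the certificate's
     * validity window [notBefore, notAfter]. A clock that was reset to a
     * date before notBefore returns false, which corresponds to the
     * handshake failure described above.
     */
    static boolean isWithinValidity(Instant deviceNow, Instant notBefore, Instant notAfter) {
        return !deviceNow.isBefore(notBefore) && !deviceNow.isAfter(notAfter);
    }
}
```

For example, with an illustrative certificate valid from 2017-01-01 to 2018-01-01, a device whose clock was reset to 2010 fails the check, while a device with a 2017 date passes.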

Apart from Amazon S3 and the Android OS, there can also be issues from mobile vendors. For example, Samsung phones have an Ultra power saving mode, and Asus and Xiaomi phones have their own data & power saving modes along with an auto-start manager. These modes can be very restrictive and are detrimental if the business needs continuous, rigorous uploads.

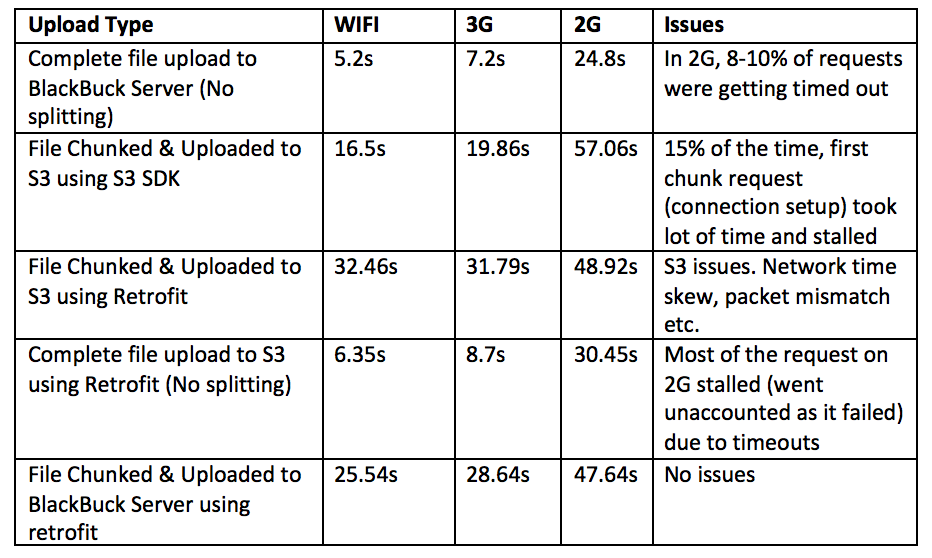

We carried out performance testing on various network types and with different modes of transfer. A 200KB file was chosen for upload, with a file chunk size (wherever applicable) of 20KB. The image above gives an overview of the results.

As you can see, when we split the file ourselves and then uploaded the chunks using Retrofit to the BlackBuck server, no issues were observed. Furthermore, by tuning the chunk size based on the size of the file & the type of network available, we achieved average upload times of 8 secs, 17 secs and 35 secs on WiFi, 3G & 2G respectively for the 200KB document (e.g. on WiFi the chunk size in our library is configured to 0.5MB).
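As a rough illustration of picking a chunk size per network type, here is a minimal sketch. Only the 0.5MB WiFi figure and the 20KB test chunk size come from this post; the `NetworkType` enum, the `ChunkSizer` class, the 3G value and the use of 20KB as the 2G default are assumptions made for the example, not the values or names used in our library.

```java
/** Hypothetical network categories, named for this example only. */
enum NetworkType { WIFI, MOBILE_3G, MOBILE_2G }

/** Hypothetical helper that picks a chunk size and counts chunks. */
final class ChunkSizer {
    private ChunkSizer() {}

    /** Returns a chunk size in bytes for the network, capped at the file size. */
    static int chunkSizeFor(NetworkType network, long fileSizeBytes) {
        int preferred;
        switch (network) {
            case WIFI:
                preferred = 512 * 1024; // 0.5MB, the WiFi figure mentioned above
                break;
            case MOBILE_3G:
                preferred = 64 * 1024;  // assumed value, not from the post
                break;
            default:
                preferred = 20 * 1024;  // assumed 2G default; 20KB was the test chunk size
                break;
        }
        // Never return a chunk larger than the file, and never less than 1 byte.
        return (int) Math.max(1, Math.min(preferred, fileSizeBytes));
    }

    /** Number of chunks needed to cover the file (ceiling division). */
    static int chunkCount(long fileSizeBytes, int chunkSize) {
        return (int) ((fileSizeBytes + chunkSize - 1) / chunkSize);
    }
}
```

With these assumed values, the 200KB test file goes up as a single chunk on WiFi and as ten 20KB chunks on 2G.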

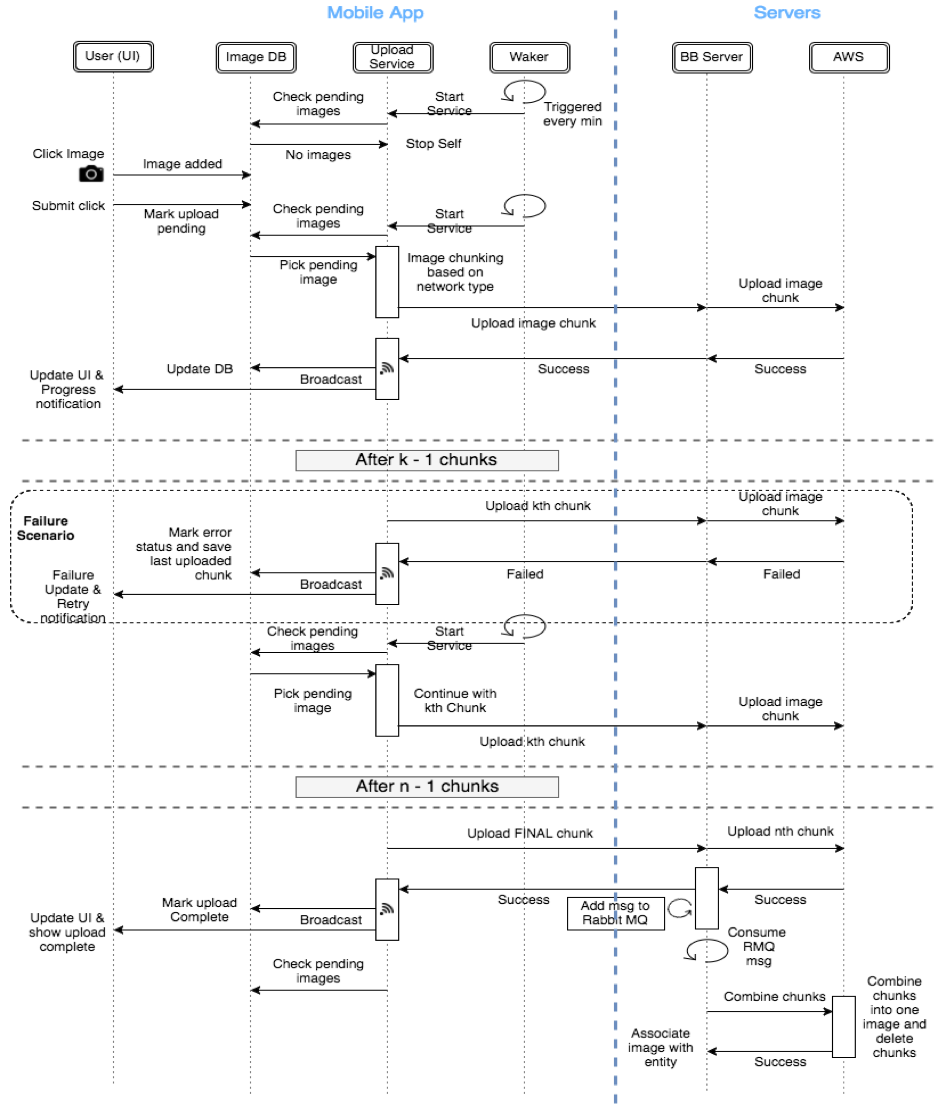

Based on the above observations, we designed the following library so that data is first uploaded directly from mobile clients to the BlackBuck server, and the backend system then saves it onto S3. Once the last chunk is received from the client, the backend combines the chunks & puts the final image onto S3. The design of this library is shown in the image above; its components are:

- Image DB is the local DB stored on the mobile client & maintained within the library.

- Upload Service checks for any pending images in the DB and uploads them. It also handles failure scenarios and broadcasts status updates to anyone who wants to listen for them (e.g. a Notification Service).

- Waker is an AlarmManager that periodically brings up the Upload Service to check for pending images.

- BB Server is the BlackBuck server, which takes care of saving to AWS S3. It also uses the RabbitMQ messaging system to create a task that combines related chunks into the complete file.

Once captured by the user, the document is compressed if necessary (based on doc type and size). The final document is saved locally and its URI is recorded in the local DB. The upload service then splits the file into multiple chunks. The chunk size depends on the type of the document, its size and the network type. The upload service also keeps track of any downloads, API calls or other async uploads happening within the app, so that the upload doesn’t interfere with the normal app experience.
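The splitting step can be sketched as follows. This is a simplified illustration that works on an in-memory byte array; `FileChunker` is a hypothetical name, and the actual library may read from the saved file and may differ in its details.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

/** Hypothetical helper that splits document bytes into fixed-size chunks. */
final class FileChunker {
    private FileChunker() {}

    /** Splits data into consecutive chunks of at most chunkSize bytes each. */
    static List<byte[]> split(byte[] data, int chunkSize) {
        if (chunkSize <= 0) {
            throw new IllegalArgumentException("chunkSize must be positive");
        }
        List<byte[]> chunks = new ArrayList<>();
        int offset = 0;
        while (offset < data.length) {
            // The last chunk may be shorter than chunkSize.
            int length = Math.min(chunkSize, data.length - offset);
            chunks.add(Arrays.copyOfRange(data, offset, offset + length));
            offset += length;
        }
        return chunks;
    }
}
```

Splitting a 200KB document with a 20KB chunk size yields ten chunks; each one can then be uploaded and tracked independently.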

The upload service uses Retrofit to upload each chunk to the endpoint provided to it, in this case the BlackBuck server. On success or failure, the corresponding info is written to the DB and a local broadcast is sent to all listeners (e.g. in case the app wants to update progress or show a notification). The service then moves on to the next chunk until the last chunk of the file is sent. In case any chunk upload fails, the document upload resumes from the next chunk that is yet to be uploaded rather than restarting from the first chunk of the file. Once the last chunk is successfully uploaded, the necessary broadcast is sent and the DB is updated. There is also a provision to clear the entry from the DB at that moment.
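The resume behaviour can be sketched roughly like this. The sketch keeps the per-chunk state in memory, whereas the library described here persists it in the local DB, and `ChunkSender` is a hypothetical stand-in for the Retrofit call; none of these names are the library’s actual API.

```java
import java.util.BitSet;

/** Hypothetical stand-in for the network call that uploads one chunk. */
interface ChunkSender {
    /** Returns true if the chunk with this index was uploaded successfully. */
    boolean send(int chunkIndex);
}

/** Tracks which chunks are done so that an upload can resume mid-file. */
final class ResumableUpload {
    private final int totalChunks;
    private final BitSet uploaded = new BitSet(); // in-memory here; a DB in practice

    ResumableUpload(int totalChunks) {
        this.totalChunks = totalChunks;
    }

    /** Index of the first chunk not yet uploaded, or -1 when all are done. */
    int nextPendingChunk() {
        int next = uploaded.nextClearBit(0);
        return next < totalChunks ? next : -1;
    }

    /**
     * Sends pending chunks in order and stops at the first failure.
     * Returns true once every chunk has been uploaded.
     */
    boolean run(ChunkSender sender) {
        int index;
        while ((index = nextPendingChunk()) != -1) {
            if (!sender.send(index)) {
                return false; // a later run() picks up from this same chunk
            }
            uploaded.set(index);
        }
        return true;
    }
}
```

If a run fails on the third chunk, calling `run` again starts from that chunk rather than from the beginning, so the first two chunks are never sent twice.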

The BlackBuck server then creates a task using the RabbitMQ messaging system, which combines all these chunks and saves the complete file onto S3. Once this is done, the image is visible on the server to website users and can be downloaded again by any other mobile client.
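The combine step on the server can be pictured with the following sketch. It is an in-memory illustration only: `ChunkAssembler` is a hypothetical name, and the queueing through RabbitMQ and the final write to S3 are left out.

```java
import java.io.ByteArrayOutputStream;
import java.util.Map;
import java.util.TreeMap;

/** Hypothetical helper that rebuilds a file from its indexed chunks. */
final class ChunkAssembler {
    private final int expectedChunks;
    // TreeMap keeps chunks sorted by index, whatever order they arrive in.
    private final Map<Integer, byte[]> received = new TreeMap<>();

    ChunkAssembler(int expectedChunks) {
        this.expectedChunks = expectedChunks;
    }

    /** Stores one chunk; re-sending the same index simply overwrites it. */
    void accept(int index, byte[] data) {
        if (index < 0 || index >= expectedChunks) {
            throw new IllegalArgumentException("chunk index out of range: " + index);
        }
        received.put(index, data.clone());
    }

    boolean isComplete() {
        return received.size() == expectedChunks;
    }

    /** Concatenates all chunks in index order into the complete file. */
    byte[] assemble() {
        if (!isComplete()) {
            throw new IllegalStateException("missing chunks");
        }
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        for (byte[] chunk : received.values()) {
            out.write(chunk, 0, chunk.length);
        }
        return out.toByteArray();
    }
}
```

Keying chunks by index means a retried chunk does not duplicate data, and the file is only assembled once every expected chunk is present.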

The system works even if the app is killed (the alarm manager takes care of restarting the service) or goes into the background. It is particularly useful for businesses that need extensive document uploads from mobile clients. It has proven its mettle on various network types, including GPRS & EDGE, and in several remote locations in India with poor network connectivity. It has given us reliable data transfer coupled with a smooth UX for app users.

We have made this library public! Here’s the link:

Github: https://github.com/BLACKBUCK-LABS/image-library

Please note: this blog was originally posted by us here on 19 Jul 2017. There have been many updates to the image library since then.