Over the last few years, our servers at BlackBuck were distributed across various AWS (Amazon Web Services) regions, based on the requirements of each POD.

Considering the dual benefit of better latencies for service-to-service (S2S) calls and lower data transfer costs with a reusable common platform infrastructure, we decided to move all of our infrastructure to a single AWS region.

MongoDB is one of the commonly used databases at BlackBuck for a variety of use cases (transactional, configuration, and reporting). Because it backs a few tier-1 applications, we needed to minimise downtime in order to maintain business continuity.

This article will document the steps required to migrate your MongoDB cluster to a new AWS region without any downtime. At the end of this article, you’ll have MongoDB migrated to a new region. Without further delay, let’s start.

Problem Statement

- The standard way to migrate MongoDB data is the traditional dump-and-restore method, which would have required anywhere from a few minutes to hours of downtime, depending on the data size

- Since we continuously process critical customer and order data from MongoDB, we cannot afford a long downtime. Hence, we came up with a solution that keeps the data of the old and new clusters continuously in sync

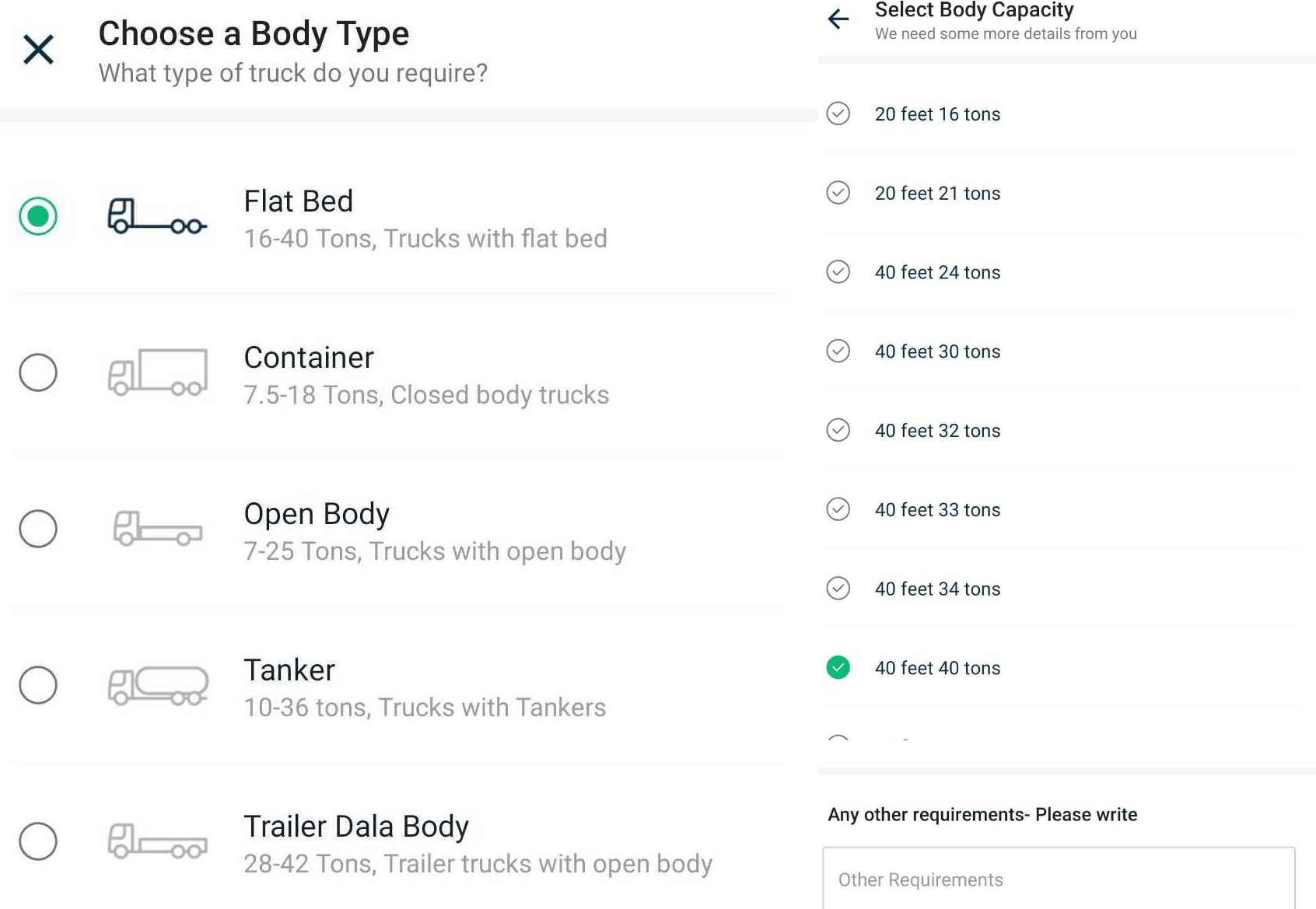

- Below are snapshots of business-critical data served from the MongoDB cluster

Prerequisite

- MongoDB should be running as a replica set

- The instances of the old and new clusters must be able to discover each other: they should either belong to the same VPC or, for clusters in different regions, be connected through VPC peering

Solution

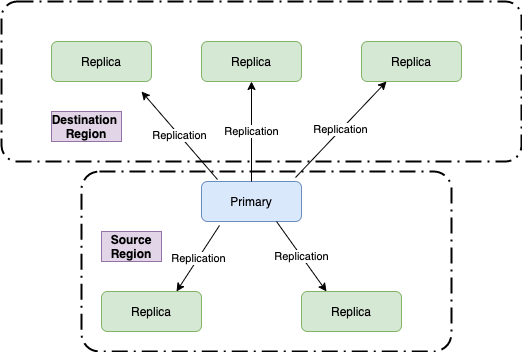

- Add the new instances in the destination region to the existing replica set as new replicas

- Update DNS entries

- Reconfigure the replica set to ensure the new primary gets elected from the destination region

- Remove the old replicas from the replica set

- Initiate a leader election to automatically move the primary node from the source region to the destination region

Step by step migration

Add new replicas (destination region)

- Create an AMI from any Mongo instance (replica) and copy it to the destination region

- Launch n instances from the AMI (the same number of instances as in the source region)

- Update bindIp on the newly launched instances to use each instance's private IP, then restart the new Mongo instances

--bindIp: The IP address that MongoDB binds to in order to listen for connections from applications

Note: This step is optional if you are using the bindIpAll: true config or you are setting up the Mongo instances manually instead of from an AWS AMI.

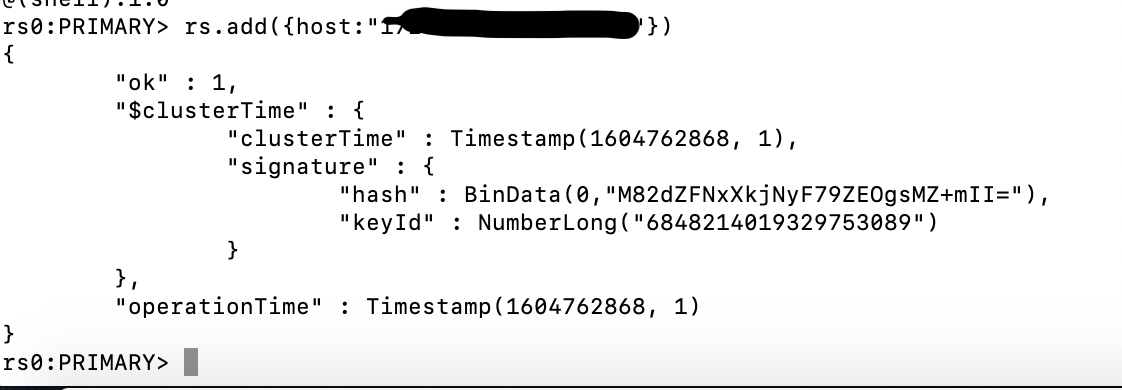

- Log in to the primary instance and add all new-region instances as replicas

rs.add( { host: "mongodbd4.example.net:27017", priority: 0, votes:0 } )

Note: As per the MongoDB documentation, a replica set can trigger a leader election in response to a member being added. Hence, we exclude the new members from leader elections during both the add and the synchronisation stages by setting votes and priority to 0 for all new instances.

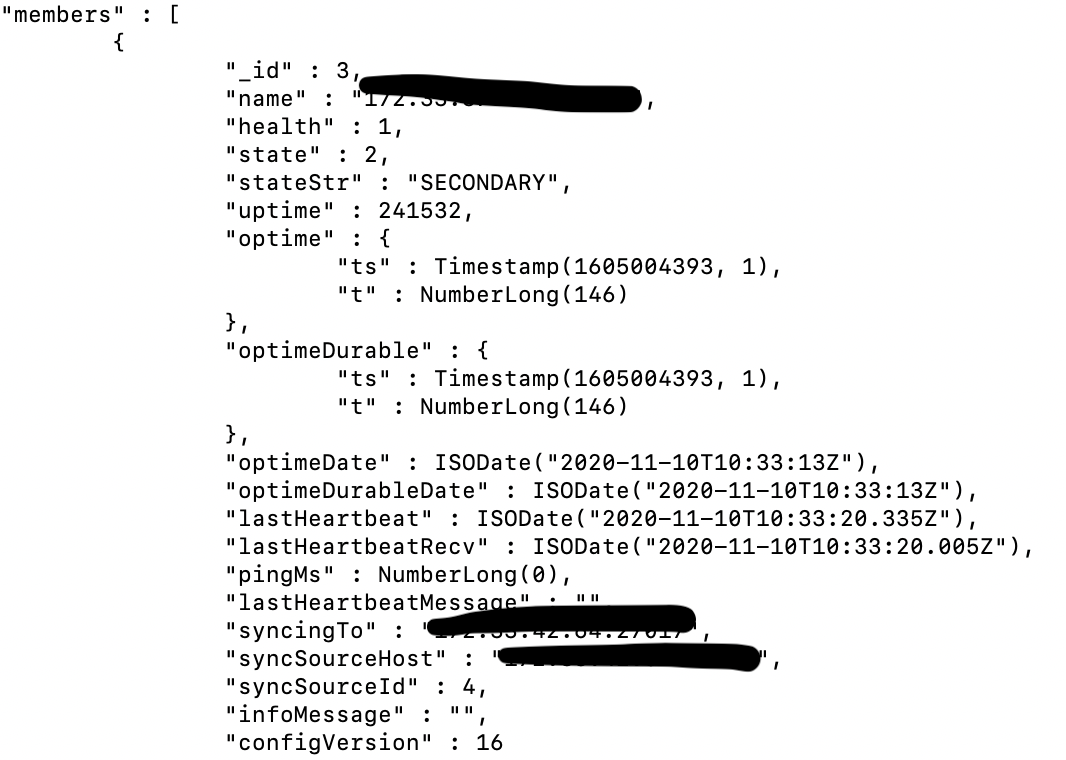
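When several instances need to be added, the same rs.add call can be repeated from a list. The snippet below is a minimal sketch of that idea, not the exact script we ran: the helper name buildNonVotingMembers and the hostnames are hypothetical, and the guard on `rs` only exists so the file can be loaded outside the mongo shell without side effects.

```javascript
// Build one member document per new-region host. priority: 0 and votes: 0
// keep the member out of elections while it performs its initial sync.
function buildNonVotingMembers(hosts) {
  return hosts.map(function (host) {
    return { host: host, priority: 0, votes: 0 };
  });
}

// Hypothetical hostnames of the destination-region instances.
const newRegionHosts = [
  "mongodbd4.example.net:27017",
  "mongodbd5.example.net:27017",
  "mongodbd6.example.net:27017",
];

// `rs` is only defined inside the mongo shell connected to the primary.
if (typeof rs !== "undefined") {
  buildNonVotingMembers(newRegionHosts).forEach(function (member) {
    rs.add(member);
  });
}
```

Adding the members one at a time keeps each configuration change small and easy to verify with rs.status() before moving on.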

- Wait until all new-region replicas are in sync with the primary; in other words, wait until stateStr becomes SECONDARY for all new-region instances

rs.status()

After adding new instance as replica
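Reading the full rs.status() output by eye gets tedious with several members, so the check can be reduced to a small filter. This is a sketch under the assumption that members are identified by their "host:port" name in the status output; the helper name membersStillSyncing and the hostnames are hypothetical.

```javascript
// Return the new-region hosts that are missing from the status document or
// have not reached the SECONDARY state yet.
// `status` is the document returned by rs.status().
function membersStillSyncing(status, newHosts) {
  return newHosts.filter(function (host) {
    const member = status.members.find(function (m) {
      return m.name === host;
    });
    return !member || member.stateStr !== "SECONDARY";
  });
}

// `rs` and `print` are only available inside the mongo shell.
if (typeof rs !== "undefined") {
  const pending = membersStillSyncing(rs.status(), [
    "mongodbd4.example.net:27017", // hypothetical new-region host
  ]);
  print(pending.length === 0
    ? "all new members are SECONDARY"
    : "still syncing: " + pending.join(", "));
}
```

An empty result means every listed host reports SECONDARY, which is the condition to wait for before touching DNS or priorities.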

Update DNS records

- Update the Route 53 hosts to point to the new instances (the n instances in the destination region). To be on the safe side, redeploy all client applications that use MongoDB so that any cached DNS entries are refreshed.

Note: We use private IPs for internal communication (all instances belong to the same VPC, or VPC peering is set up), while clients use DNS hostnames to connect to the Mongo clusters.

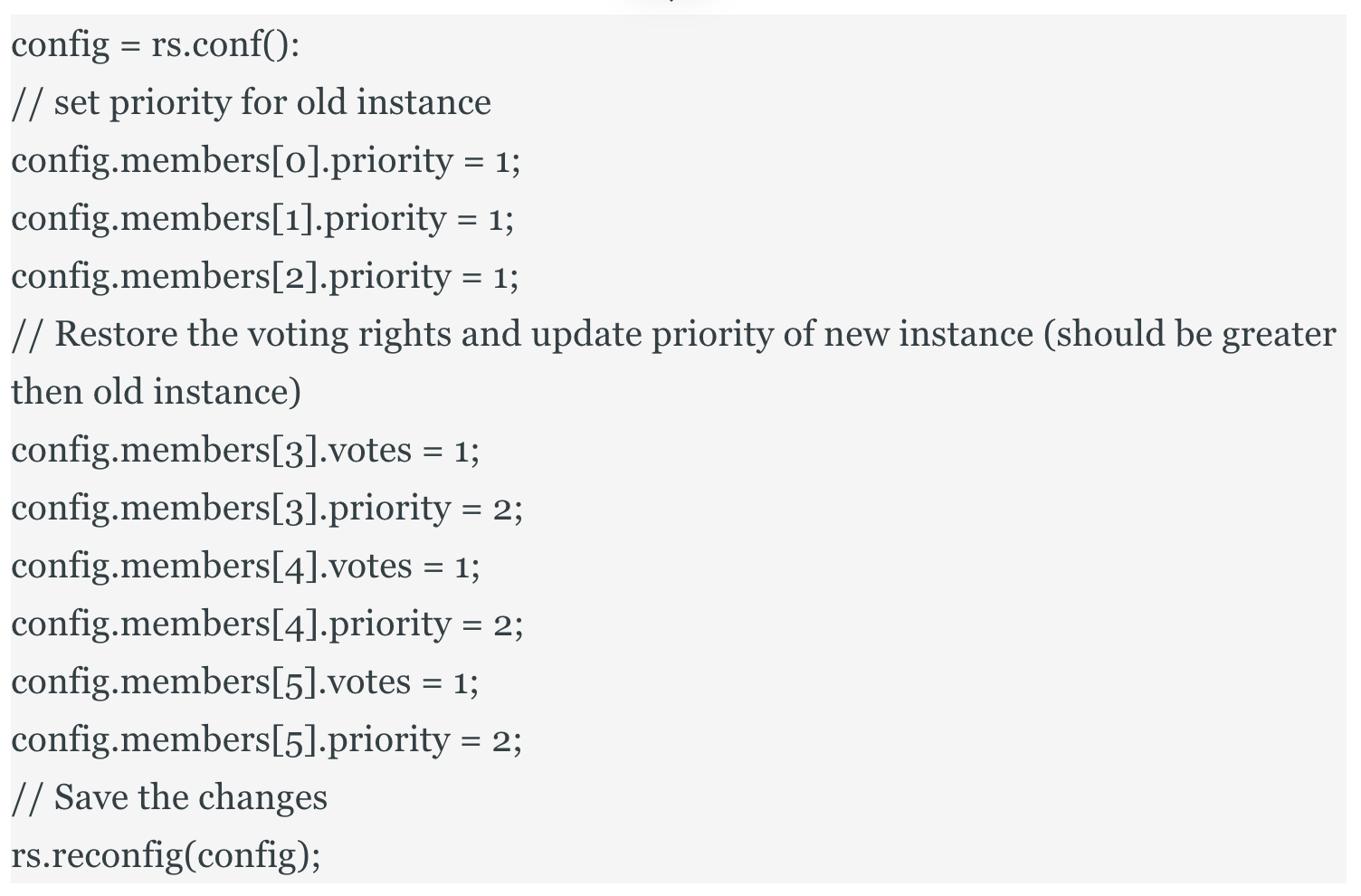
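If the DNS update is scripted rather than done in the console, the record changes can be expressed as a Route 53 change batch. The sketch below only builds that document; it assumes one A record per client-facing hostname, and the record names, IPs, TTL and the helper name buildDnsChangeBatch are all hypothetical. The resulting object has the shape expected by the Route 53 ChangeResourceRecordSets operation.

```javascript
// Build a Route 53 change batch that points each hostname at the private IP
// of its destination-region instance. UPSERT updates an existing record or
// creates it when it does not exist.
function buildDnsChangeBatch(records, ttlSeconds) {
  return {
    Comment: "Point MongoDB hostnames at the destination-region instances",
    Changes: records.map(function (record) {
      return {
        Action: "UPSERT",
        ResourceRecordSet: {
          Name: record.name,
          Type: "A",
          TTL: ttlSeconds,
          ResourceRecords: [{ Value: record.privateIp }],
        },
      };
    }),
  };
}

// Hypothetical hostnames and private IPs.
const changeBatch = buildDnsChangeBatch(
  [
    { name: "mongo1.internal.example.net", privateIp: "10.20.0.11" },
    { name: "mongo2.internal.example.net", privateIp: "10.20.0.12" },
  ],
  60
);
console.log(JSON.stringify(changeBatch, null, 2));
```

A short TTL limits how long clients can keep resolving the old addresses, although redeploying the clients remains the safer way to flush cached entries.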

Reconfiguring replica set

- Before the primary steps down (stepDown()), we need to reconfigure the priority and votes of the replica set members to ensure the primary gets elected from the destination cluster

Note: To ensure the leader gets elected from the destination region, we set the priority of the new-region replicas higher than that of the old-region replicas and give voting rights to the new replicas.

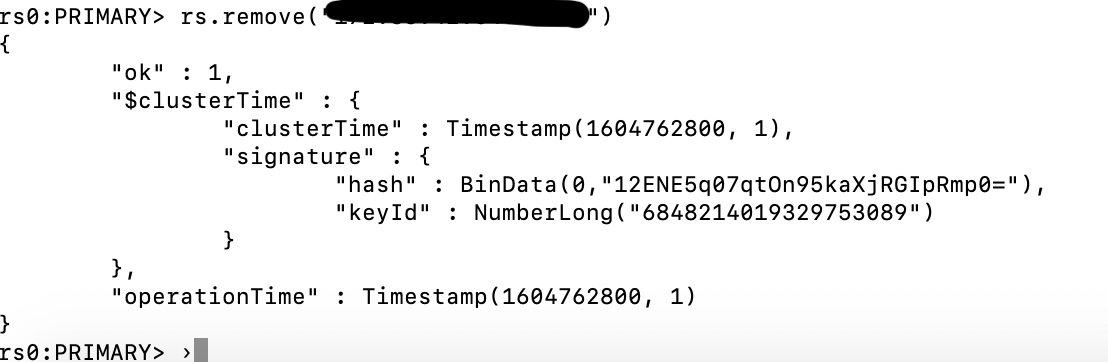

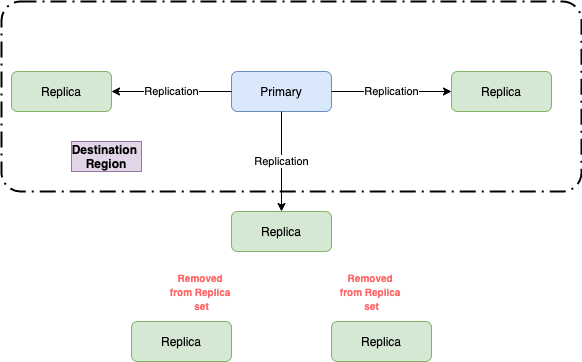
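The reconfiguration boils down to editing the document returned by rs.conf() and passing it back to rs.reconfig(). The following is a minimal sketch, assuming the old-region members keep the default priority of 1 so that a priority of 2 is enough to favour the new region; the helper name promoteMember and the hostnames are hypothetical. It changes one member per reconfig because MongoDB 4.4 and later allow adding or removing at most one voting member per rs.reconfig() call.

```javascript
// Return a copy of the replica set config in which the given host gets a
// vote and the given priority. All other members are left unchanged and the
// input config is not modified.
function promoteMember(config, host, priority) {
  return Object.assign({}, config, {
    members: config.members.map(function (member) {
      return member.host === host
        ? Object.assign({}, member, { votes: 1, priority: priority })
        : member;
    }),
  });
}

// `rs` is only defined inside the mongo shell connected to the primary.
if (typeof rs !== "undefined") {
  // Hypothetical destination-region hosts.
  const destinationHosts = [
    "mongodbd4.example.net:27017",
    "mongodbd5.example.net:27017",
    "mongodbd6.example.net:27017",
  ];
  destinationHosts.forEach(function (host) {
    // Re-read the config each time so every reconfig starts from the latest version.
    rs.reconfig(promoteMember(rs.conf(), host, 2));
  });
}
```

After the loop, rs.conf() should show votes: 1 and the higher priority on every destination-region member.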

Remove existing region replicas

- Remove the replicas of the source region one by one until only the primary is left.

rs.remove(hostname)

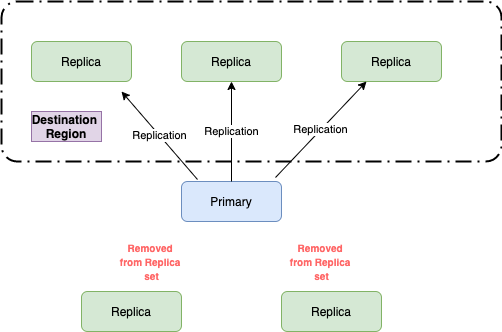

After removing replica instance from existing region

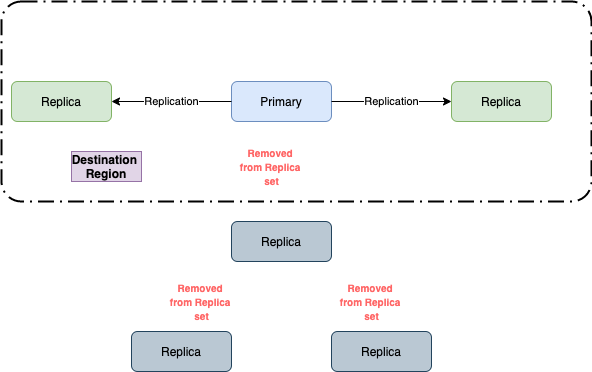
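To avoid removing the current primary by mistake, the list of hosts passed to rs.remove can be derived from rs.status(). This is a sketch only; the helper name sourceMembersToRemove and the hostnames are hypothetical.

```javascript
// Return the source-region members that can be removed right now, which is
// every listed host except the one currently acting as PRIMARY.
// `status` is the document returned by rs.status().
function sourceMembersToRemove(status, sourceHosts) {
  return status.members
    .filter(function (member) {
      return sourceHosts.includes(member.name) && member.stateStr !== "PRIMARY";
    })
    .map(function (member) {
      return member.name;
    });
}

// `rs` is only defined inside the mongo shell connected to the primary.
if (typeof rs !== "undefined") {
  // Hypothetical source-region hosts.
  const sourceHosts = [
    "mongodbd1.example.net:27017",
    "mongodbd2.example.net:27017",
    "mongodbd3.example.net:27017",
  ];
  sourceMembersToRemove(rs.status(), sourceHosts).forEach(function (host) {
    rs.remove(host); // one member per call
  });
}
```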

Initiate leader election

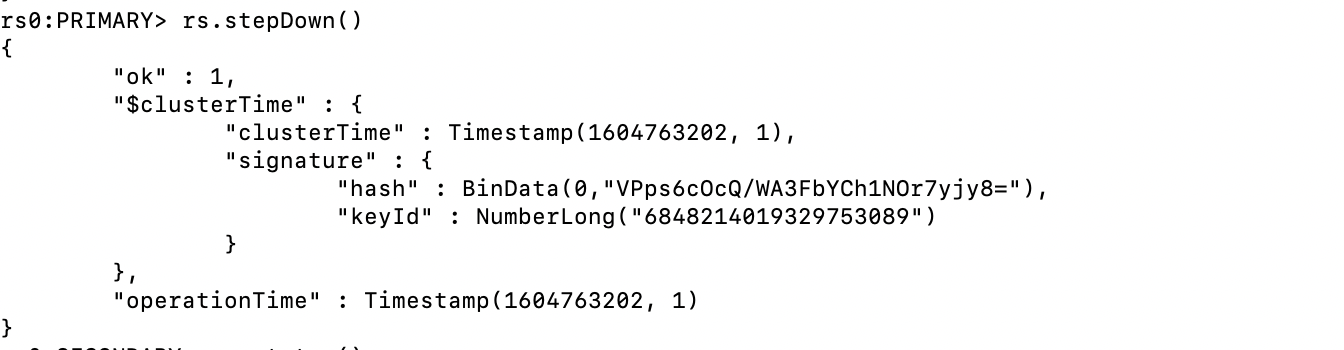

- Step down the current primary so that a new primary is elected in the destination region

rs.stepDown(stepDownSecs, secondaryCatchUpPeriodSecs)

The above command instructs the primary of the replica set to become a secondary. After the primary steps down, eligible secondaries hold an election for primary. As a result, the existing primary becomes a secondary and a new primary is elected in the new region.

--stepDownSecs: The number of seconds to step down the primary, during which the stepped-down member is ineligible to become primary. If you specify a non-numeric value, the command uses 60 seconds.

--secondaryCatchUpPeriodSecs: The number of seconds that mongod waits for an electable secondary to catch up to the primary. The default is 10 seconds.
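A concrete invocation with both parameters spelled out, plus a small check for the outcome, might look like the sketch below. The values 60 and 10 simply mirror the defaults described above; the helper name primaryInDestination and any hostnames passed to it are hypothetical.

```javascript
// Return true when the member currently reporting PRIMARY is one of the
// destination-region hosts. `status` is the document returned by rs.status().
function primaryInDestination(status, destinationHosts) {
  const primary = status.members.find(function (member) {
    return member.stateStr === "PRIMARY";
  });
  return primary !== undefined && destinationHosts.includes(primary.name);
}

// `rs` is only defined inside the mongo shell. Run this on the current primary.
if (typeof rs !== "undefined") {
  // Step down for 60 seconds, waiting up to 10 seconds for an electable
  // secondary to catch up first.
  rs.stepDown(60, 10);
}
```

Once the election has settled, calling primaryInDestination(rs.status(), destinationHosts) from any member confirms whether the new primary landed in the destination region. The check returns false while no member reports PRIMARY, so it may need to be repeated for a few seconds.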

After leader election

- Remove the remaining replica in the source region

Final stage

Take-Away!

- MongoDB clusters can indeed be migrated to another region without downtime. However, the replica set cannot process write operations until the election completes successfully. With the default replica configuration settings, the median time before a cluster elects a new primary should not typically exceed 12 seconds. Your application's connection logic should tolerate automatic failovers and the subsequent leader elections

- If you are using drivers compatible with MongoDB 4.2 or later, retryable writes are enabled by default. Refer to the documentation for more info

- The replica set can continue to serve read queries during the leader election if such queries are configured to run on secondaries
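On the client side, these points mostly come down to connection string options. The sketch below assembles such a string; the hostnames, replica set name, database name and the helper name buildMongoUri are hypothetical, and secondaryPreferred is just one possible read preference for reads that can tolerate slightly stale data.

```javascript
// Build a replica set connection string that retries writes once after a
// failover and lets reads fall back to secondaries during an election.
function buildMongoUri(hosts, replicaSet, database) {
  const options = [
    "replicaSet=" + encodeURIComponent(replicaSet),
    "retryWrites=true",
    "readPreference=secondaryPreferred",
  ];
  return "mongodb://" + hosts.join(",") + "/" + database + "?" + options.join("&");
}

// Hypothetical client-facing hostnames.
const uri = buildMongoUri(
  ["mongo1.internal.example.net:27017", "mongo2.internal.example.net:27017"],
  "rs0",
  "orders"
);
console.log(uri);
```

Listing more than one host lets the driver discover the rest of the replica set even if the first host is unreachable during the switch.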
